InfoJobs is the first privately owned career network in Europe and one of the most popular sites on the Internet. They help professionals and companies meet each other through the website.

The website maintains an average of 50k active jobs, and more than 5k new jobs are posted each week. Job pages therefore make up the majority of its indexable pages. However, InfoJobs was experiencing two critical problems with these pages:

- A very low rate of indexing

- Delay in indexing – Sometimes job opportunities appeared in Google search results even after the job had expired.

They needed a solution.

Expertise Used

- SEO

- R&D

- Google Cloud and Related Google Products

- Python Language

1. Challenge

After conducting the status analysis, we found that the HTML structure of the job pages was technically sound and that search engine crawlers could crawl and index them without difficulty. However, the XML sitemap did not include all the job URLs, which could lead to a low crawl rate. Implementing a proper XML sitemap with all job URLs is the traditional way of letting the Google crawler know about all the pages.

A job is a short-lived entity: it has an expiry date, and the job page expires with it. The SEO challenge was therefore how to guarantee that a job page is indexed and searchable on Google before it expires.

In addition, our technical team did not have access to the website's code base, so we could neither tweak the current XML sitemap generation logic nor read URLs from the database.

2. Approach

Our SEO experts and R&D team worked together to analyze the situation and find the best solution for the client. Because job pages are short-lived and expire with the job, we needed a faster way of indexing. Hence we prioritized submitting URLs directly over exposing them via sitemaps. Google Search Console has a feature for submitting URLs for indexing, which works for a few tens of URLs. Considering the daily volume of URLs, however, we chose to implement the Indexing API.
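
As a rough illustration of what submitting a URL involves, the sketch below builds the JSON body that the Indexing API's publish method expects. It is a minimal sketch and not the application described here: the job URL is a placeholder, the helper name `build_notification` is hypothetical, and reporting expired jobs with `URL_DELETED` is an assumption rather than something this case study confirms. The authorized HTTP POST itself (which requires service account credentials) is left out.

```python
import json

# Endpoint of the Google Indexing API's publish method.
INDEXING_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_notification(url: str, expired: bool = False) -> dict:
    """Return the JSON body for one Indexing API notification.

    Hypothetical helper: URL_UPDATED asks Google to crawl a new or changed
    page, URL_DELETED reports that a page has been removed.
    """
    return {"url": url, "type": "URL_DELETED" if expired else "URL_UPDATED"}


if __name__ == "__main__":
    # Placeholder job URL, used only for illustration.
    body = build_notification("https://www.example.com/jobs/12345")
    print("POST", INDEXING_ENDPOINT)
    print(json.dumps(body))
```

In a real deployment each body would be sent as an authenticated POST to the endpoint, one notification per job URL.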

However, we first needed to know every URL to submit each day. Since we could neither change the current code base nor query the database for job URLs, we listed three possible options:

- Scraping the site daily

- Reading via the data API

- Reading from the downloaded job data feed

We considered a few key aspects to choose the best approach for reading URLs:

- Data validity – Accurately read active and new jobs on a daily basis

- Reading speed – The faster the better

- Load on the current application – Minimum access to the current website

- Processing performance

Weighing all of these together, we chose to use the InfoJobs data feed and batch process all job URLs.
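
The feed-based approach can be sketched roughly as follows. The actual format of the InfoJobs data feed is not described here, so the XML layout (`<jobs>`, `<job>`, `<url>`), the sample URLs, and the function names `read_job_urls` and `batches` are all assumptions made for illustration. The batch size is likewise an arbitrary value.

```python
import xml.etree.ElementTree as ET
from typing import Iterator, List

# Hypothetical feed excerpt; the real feed layout may differ.
SAMPLE_FEED = """<jobs>
  <job><url>https://www.example.com/jobs/1</url></job>
  <job><url>https://www.example.com/jobs/2</url></job>
  <job><url>https://www.example.com/jobs/3</url></job>
</jobs>"""


def read_job_urls(feed_xml: str) -> List[str]:
    """Extract every non-empty <url> value from the downloaded feed."""
    root = ET.fromstring(feed_xml)
    return [el.text.strip() for el in root.iter("url") if el.text and el.text.strip()]


def batches(urls: List[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` URLs."""
    for start in range(0, len(urls), size):
        yield urls[start:start + size]


if __name__ == "__main__":
    for batch in batches(read_job_urls(SAMPLE_FEED), size=2):
        print(batch)
```

Reading from a downloaded file this way puts no load on the live website, which matches the "minimum access" criterion listed above.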

With this decision made, the solution was ready for software application design. Our technical team designed and built the application in Python, a language widely used in data science. Within a very short period of time we delivered the application to production, where it ran smoothly and efficiently.

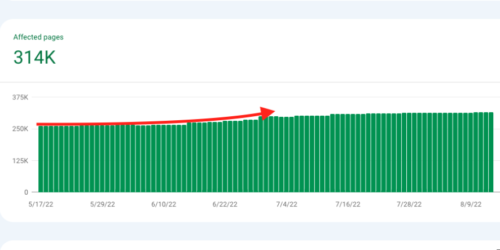

3. Results

High index rate – The new application confirms 100% indexing of up to 2,000 pages per day. The limit comes from Google's daily quota, which can be increased in the future as the daily count requires.
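
One way to stay within such a daily quota is to submit only URLs that have not been sent before and to defer the overflow to the next run. The sketch below is an assumption about how this could be done, not a description of the delivered application; `plan_daily_submission` and the example URLs are hypothetical, and the quota value is simply the 2,000-per-day figure quoted above.

```python
from typing import List, Set, Tuple

DAILY_QUOTA = 2000  # pages per day, as quoted in the results


def plan_daily_submission(
    feed_urls: List[str],
    already_submitted: Set[str],
    quota: int = DAILY_QUOTA,
) -> Tuple[List[str], List[str]]:
    """Split pending URLs into (submit today, defer to a later run)."""
    # Keep feed order, skipping anything submitted on a previous day.
    pending = [url for url in feed_urls if url not in already_submitted]
    return pending[:quota], pending[quota:]


if __name__ == "__main__":
    urls = [f"https://www.example.com/jobs/{i}" for i in range(2500)]
    today, deferred = plan_daily_submission(urls, already_submitted={urls[0]})
    print(len(today), "to submit today,", len(deferred), "deferred")
```

If the quota is raised later, only the `DAILY_QUOTA` value needs to change.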

Faster indexing – Generally, a new page takes at least a few days to be read by Google, even when proper SEO is in place. With the new approach, we reduced the crawling delay from days to hours: the majority of pages are indexed the same day, within a few hours.

4. Benefits

- Cost effectiveness

- Processing efficiency

- Opportunity to explore more data processing capabilities

- Zero effect on the current website (code, database, and its traffic)